AR Hover Navigation

Multidimensional Pathways within Harvard Yard

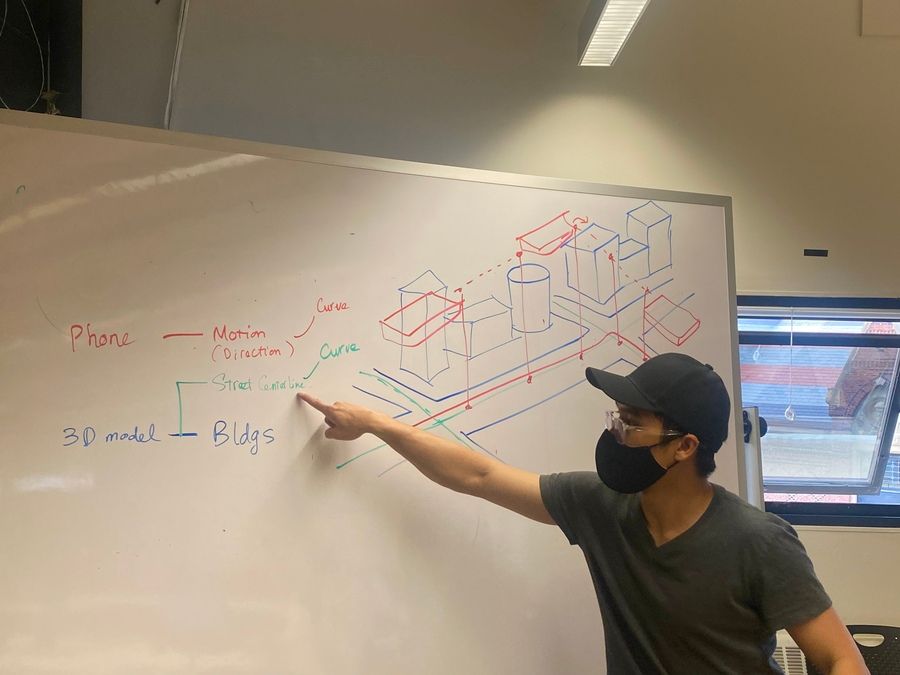

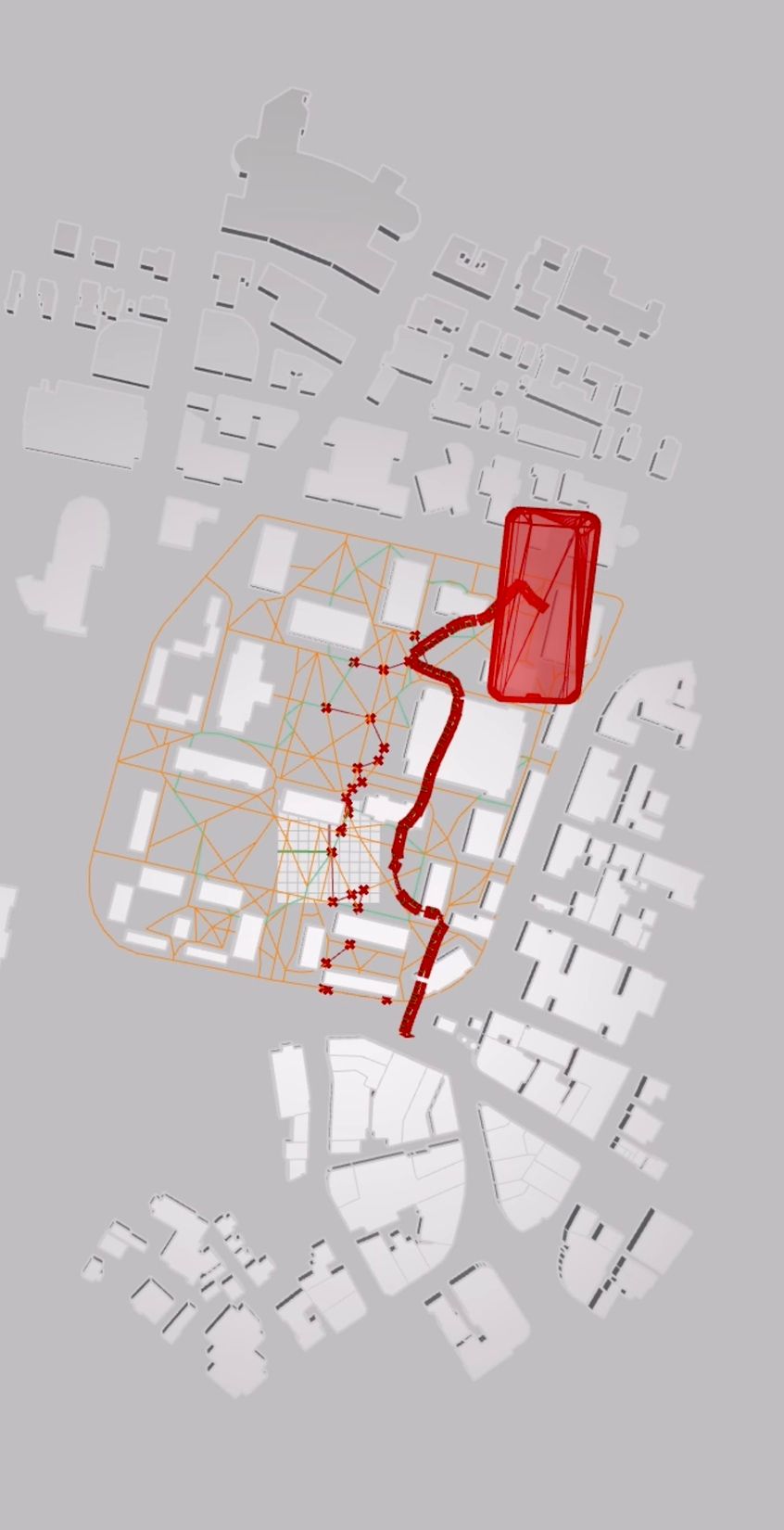

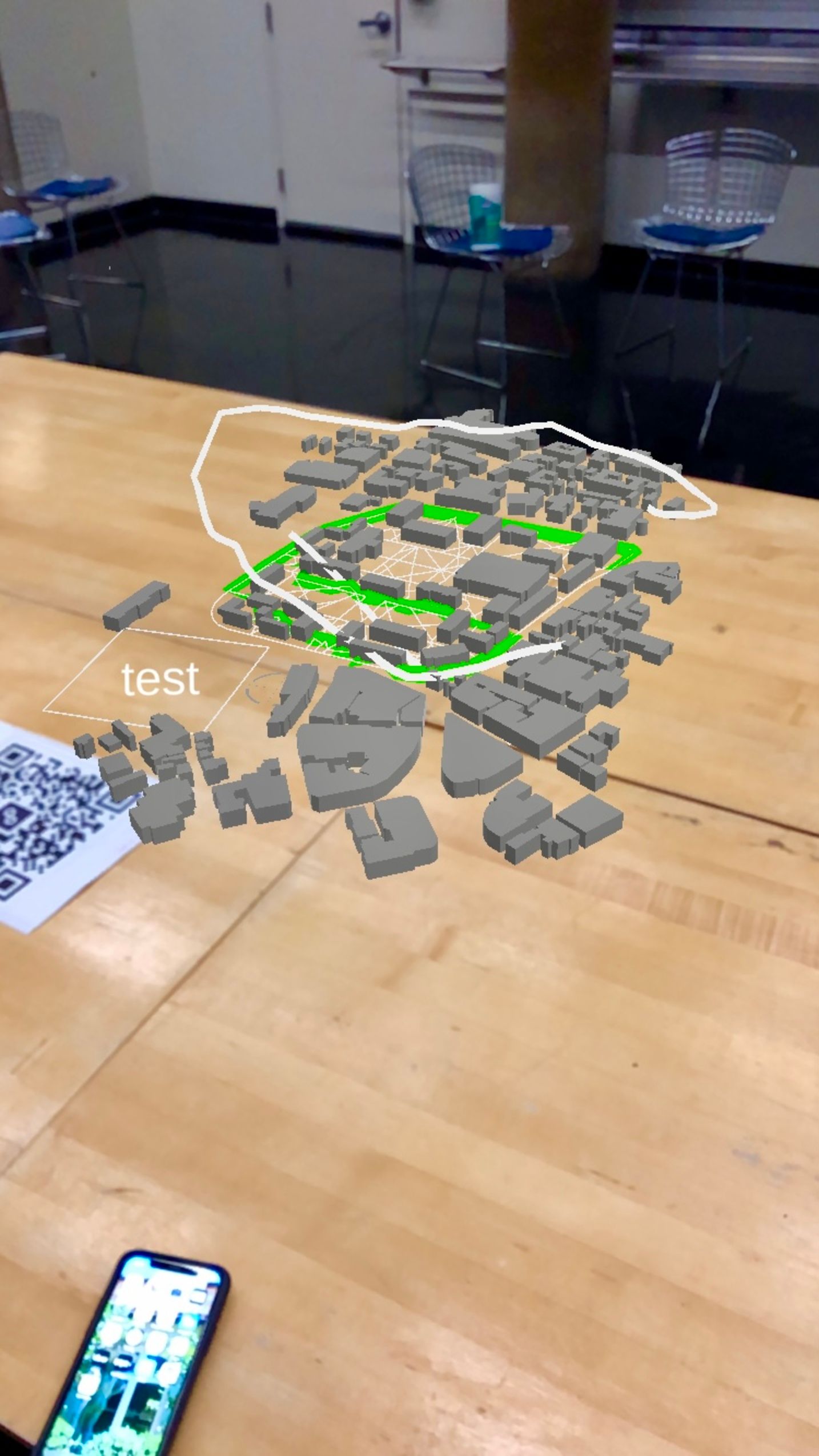

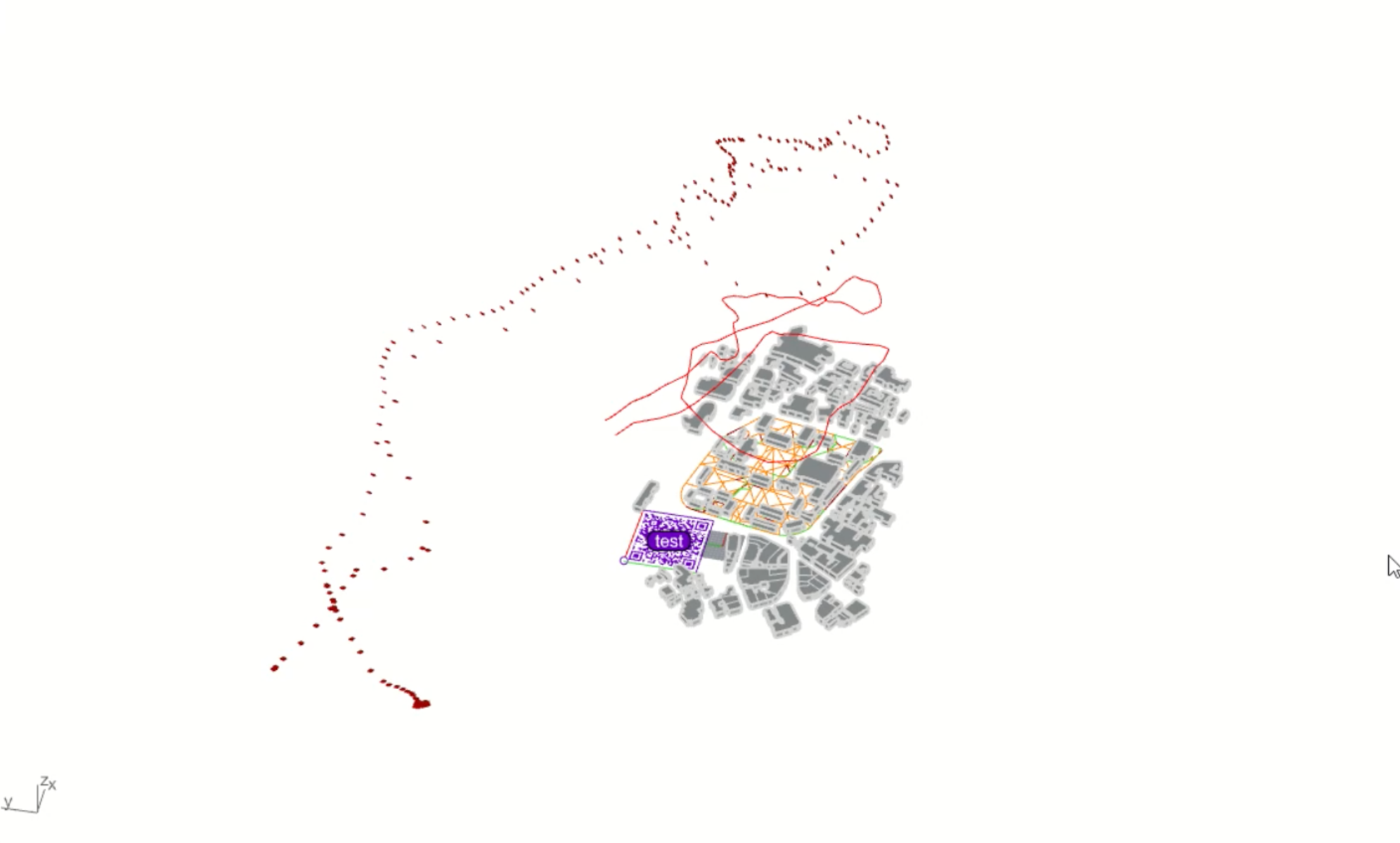

For our project, AR Hover Navigation, we set out to record a person's motion as they traverse a path through 3D AR space, both to help a lost traveler along their route and to provide a multidimensional view of their journey. We tracked our motion using the Fologram plug-in for Rhino, together with C# scripts for the Fologram components, to get a more realistic depiction of spatial cognition while navigating Harvard Yard. Note that we adapted Xiaoshi's original code to fit our particular use case.

3D data of Harvard Yard was scraped using the Google Maps API and then brought into Blender so that the geometry of the extracted sample could be normalized with its centerline data preserved. This lets those using the Fologram navigation tracker closely mimic movement within the 3D AR environment. The shape of the traveler's movement is tracked in augmented reality and displayed through the Fologram Rhino interface. Displaying motion in augmented reality helps travelers avoid losing their way and lets them appreciate their path in a novel shape and perspective. As a next step, we will attempt to attach Unreal Engine's MetaHumans to the tracked movement through the 3D environment to add a further level of realism to the user's movement along their path.
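To make the idea of recording the shape of a traveler's movement concrete, here is a minimal sketch in Python. It is not the Fologram C# code used in the project. It assumes that the tracker delivers a stream of (x, y, z) device positions, and it keeps a sample only when the traveler has moved a minimum distance, so that the stored path stays compact. The `PathRecorder` class, its method names, and the `min_step` threshold are all hypothetical names introduced for illustration.

```python
import math


class PathRecorder:
    """Hypothetical helper: stores a tracked 3D path as a list of points."""

    def __init__(self, min_step=0.25):
        # min_step is an assumed threshold (in model units) below which
        # a new sample is treated as jitter and ignored.
        self.min_step = min_step
        self.points = []

    def add_sample(self, position):
        """Append position if it is far enough from the last stored point.

        Returns True when the sample was stored, False when it was skipped.
        """
        p = tuple(float(c) for c in position)
        if not self.points or math.dist(self.points[-1], p) >= self.min_step:
            self.points.append(p)
            return True
        return False

    def length(self):
        """Total length of the recorded polyline."""
        return sum(math.dist(a, b) for a, b in zip(self.points, self.points[1:]))


# Example usage with made-up positions
recorder = PathRecorder(min_step=0.25)
for sample in [(0, 0, 0), (0.1, 0, 0), (1, 0, 0), (1, 1, 0)]:
    recorder.add_sample(sample)

print(len(recorder.points), recorder.length())
```

In a Rhino or Fologram setting, the stored points would typically be turned into a polyline or curve for display. The distance threshold is one simple way to filter tracking jitter, and other filters would work as well.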